Далее будет представлено максимально простое объяснение того, как работают нейронные сети, а также показаны способы их реализации в Python. Приятная новость для новичков – нейронные сети не такие уж и сложные. Термин нейронные сети зачастую используют в разговоре, ссылаясь на какой-то чрезвычайно запутанный концепт. На деле же все намного проще.

Данная статья предназначена для людей, которые ранее не работали с нейронными сетями вообще или же имеют довольно поверхностное понимание того, что это такое. Принцип работы нейронных сетей будет показан на примере их реализации через Python.

Содержание статьи

- Создание нейронных блоков

- Простой пример работы с нейронами в Python

- Создание нейрона с нуля в Python

- Пример сбор нейронов в нейросеть

- Пример прямого распространения FeedForward

- Создание нейронной сети прямое распространение FeedForward

- Пример тренировки нейронной сети — минимизация потерь, Часть 1

- Пример подсчета потерь в тренировки нейронной сети

- Python код среднеквадратической ошибки (MSE)

- Тренировка нейронной сети — многовариантные исчисления, Часть 2

- Пример подсчета частных производных

- Тренировка нейронной сети: Стохастический градиентный спуск

- Создание нейронной сети с нуля на Python

Создание нейронных блоков

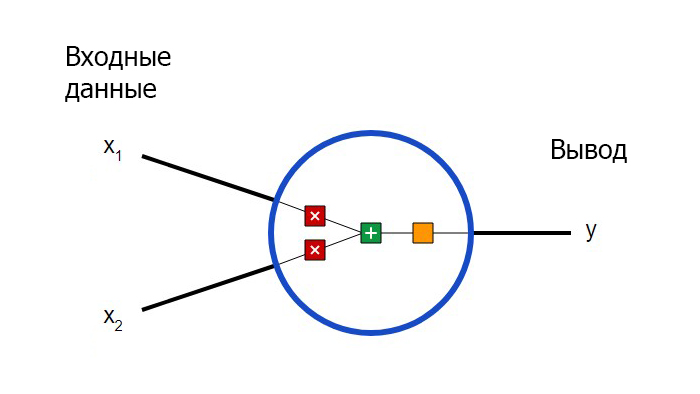

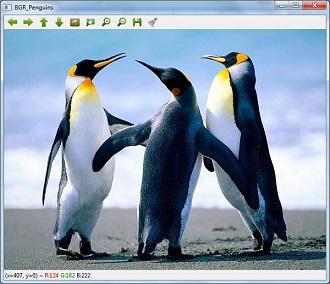

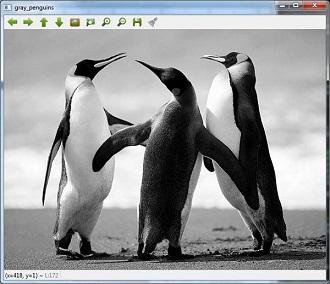

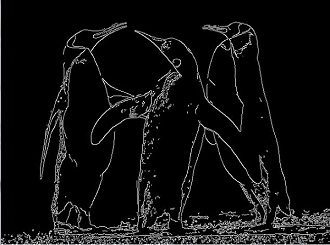

Для начала необходимо определиться с тем, что из себя представляют базовые компоненты нейронной сети – нейроны. Нейрон принимает вводные данные, выполняет с ними определенные математические операции, а затем выводит результат. Нейрон с двумя входными данными выглядит следующим образом:

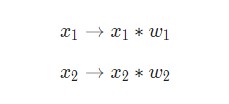

Здесь происходят три вещи. Во-первых, каждый вход умножается на вес (на схеме обозначен красным):

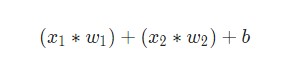

Затем все взвешенные входы складываются вместе со смещением b (на схеме обозначен зеленым):

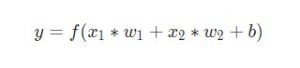

Наконец, сумма передается через функцию активации (на схеме обозначена желтым):

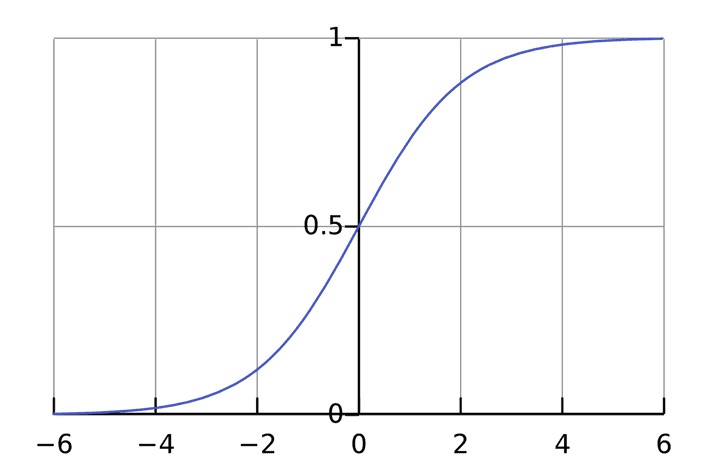

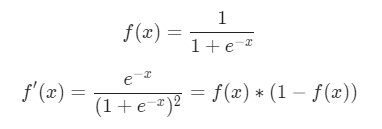

Функция активации используется для подключения несвязанных входных данных с выводом, у которого простая и предсказуемая форма. Как правило, в качестве используемой функцией активации берется функция сигмоида:

Функция сигмоида выводит только числа в диапазоне (0, 1). Вы можете воспринимать это как компрессию от (−∞, +∞) до (0, 1). Крупные отрицательные числа становятся ~0, а крупные положительные числа становятся ~1.

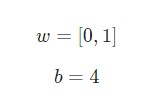

Предположим, у нас есть нейрон с двумя входами, который использует функцию активации сигмоида и имеет следующие параметры:

w = [0,1] — это просто один из способов написания w1 = 0, w2 = 1 в векторной форме. Присвоим нейрону вход со значением x = [2, 3]. Для более компактного представления будет использовано скалярное произведение.

С учетом, что вход был x = [2, 3], вывод будет равен 0.999. Вот и все. Такой процесс передачи входных данных для получения вывода называется прямым распространением, или feedforward.

Создание нейрона с нуля в Python

Есть вопросы по Python?

На нашем форуме вы можете задать любой вопрос и получить ответ от всего нашего сообщества!

Telegram Чат & Канал

Вступите в наш дружный чат по Python и начните общение с единомышленниками! Станьте частью большого сообщества!

Паблик VK

Одно из самых больших сообществ по Python в социальной сети ВК. Видео уроки и книги для вас!

Приступим к имплементации нейрона. Для этого потребуется использовать NumPy. Это мощная вычислительная библиотека Python, которая задействует математические операции:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

import numpy as np def sigmoid(x): # Наша функция активации: f(x) = 1 / (1 + e^(-x)) return 1 / (1 + np.exp(—x)) class Neuron: def __init__(self, weights, bias): self.weights = weights self.bias = bias def feedforward(self, inputs): # Вводные данные о весе, добавление смещения # и последующее использование функции активации total = np.dot(self.weights, inputs) + self.bias return sigmoid(total) weights = np.array([0, 1]) # w1 = 0, w2 = 1 bias = 4 # b = 4 n = Neuron(weights, bias) x = np.array([2, 3]) # x1 = 2, x2 = 3 print(n.feedforward(x)) # 0.9990889488055994 |

Узнаете числа? Это тот же пример, который рассматривался ранее. Ответ полученный на этот раз также равен 0.999.

Пример сбор нейронов в нейросеть

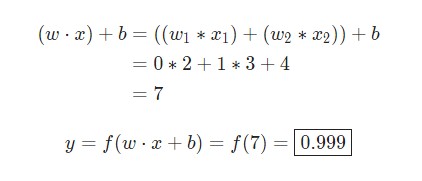

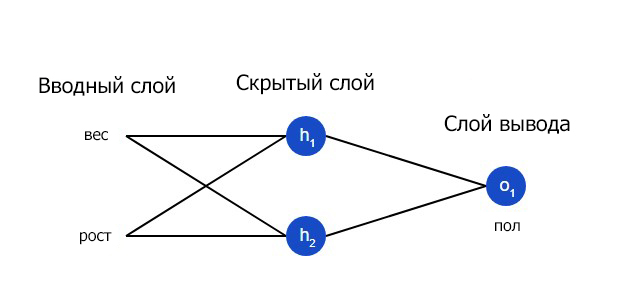

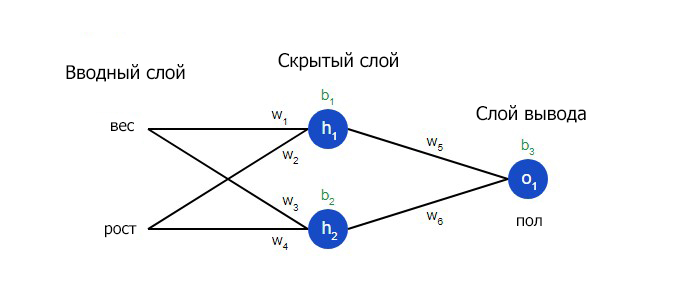

Нейронная сеть по сути представляет собой группу связанных между собой нейронов. Простая нейронная сеть выглядит следующим образом:

На вводном слое сети два входа – x1 и x2. На скрытом слое два нейтрона — h1 и h2. На слое вывода находится один нейрон – о1. Обратите внимание на то, что входные данные для о1 являются результатами вывода h1 и h2. Таким образом и строится нейросеть.

Скрытым слоем называется любой слой между вводным слоем и слоем вывода, что являются первым и последним слоями соответственно. Скрытых слоев может быть несколько.

Пример прямого распространения FeedForward

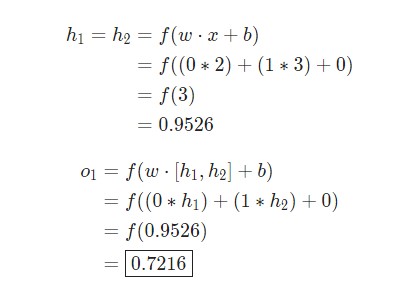

Давайте используем продемонстрированную выше сеть и представим, что все нейроны имеют одинаковый вес w = [0, 1], одинаковое смещение b = 0 и ту же самую функцию активации сигмоида. Пусть h1, h2 и o1 сами отметят результаты вывода представленных ими нейронов.

Что случится, если в качестве ввода будет использовано значение х = [2, 3]?

Результат вывода нейронной сети для входного значения х = [2, 3] составляет 0.7216. Все очень просто.

Нейронная сеть может иметь любое количество слоев с любым количеством нейронов в этих слоях.

Суть остается той же: нужно направить входные данные через нейроны в сеть для получения в итоге выходных данных. Для простоты далее в данной статье будет создан код сети, упомянутая выше.

Создание нейронной сети прямое распространение FeedForward

Далее будет показано, как реализовать прямое распространение feedforward в отношении нейронной сети. В качестве опорной точки будет использована следующая схема нейронной сети:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 |

import numpy as np # … Здесь код из предыдущего раздела class OurNeuralNetwork: «»» Нейронная сеть, у которой: — 2 входа — 1 скрытый слой с двумя нейронами (h1, h2) — слой вывода с одним нейроном (o1) У каждого нейрона одинаковые вес и смещение: — w = [0, 1] — b = 0 «»» def __init__(self): weights = np.array([0, 1]) bias = 0 # Класс Neuron из предыдущего раздела self.h1 = Neuron(weights, bias) self.h2 = Neuron(weights, bias) self.o1 = Neuron(weights, bias) def feedforward(self, x): out_h1 = self.h1.feedforward(x) out_h2 = self.h2.feedforward(x) # Вводы для о1 являются выводами h1 и h2 out_o1 = self.o1.feedforward(np.array([out_h1, out_h2])) return out_o1 network = OurNeuralNetwork() x = np.array([2, 3]) print(network.feedforward(x)) # 0.7216325609518421 |

Мы вновь получили 0.7216. Похоже, все работает.

Пример тренировки нейронной сети — минимизация потерь, Часть 1

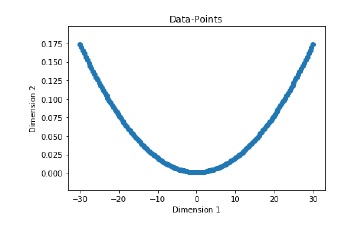

Предположим, у нас есть следующие параметры:

| Имя/Name | Вес/Weight (фунты) | Рост/Height (дюймы) | Пол/Gender |

| Alice | 133 | 65 | F |

| Bob | 160 | 72 | M |

| Charlie | 152 | 70 | M |

| Diana | 120 | 60 | F |

Давайте натренируем нейронную сеть таким образом, чтобы она предсказывала пол заданного человека в зависимости от его веса и роста.

Мужчины Male будут представлены как 0, а женщины Female как 1. Для простоты представления данные также будут несколько смещены.

| Имя/Name | Вес/Weight (минус 135) | Рост/Height (минус 66) | Пол/Gender |

| Alice | -2 | -1 | 1 |

| Bob | 25 | 6 | 0 |

| Charlie | 17 | 4 | 0 |

| Diana | -15 | -6 | 1 |

Для оптимизации здесь произведены произвольные смещения

135и66. Однако, обычно для смещения выбираются средние показатели.

Потери

Перед тренировкой нейронной сети потребуется выбрать способ оценки того, насколько хорошо сеть справляется с задачами. Это необходимо для ее последующих попыток выполнять поставленную задачу лучше. Таков принцип потери.

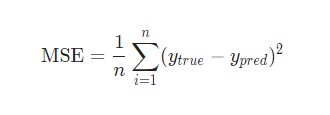

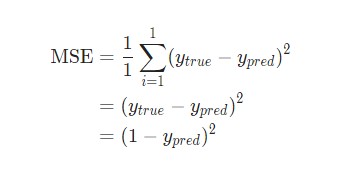

В данном случае будет использоваться среднеквадратическая ошибка (MSE) потери:

Давайте разберемся:

n– число рассматриваемых объектов, которое в данном случае равно 4. ЭтоAlice,Bob,CharlieиDiana;y– переменные, которые будут предсказаны. В данном случае это пол человека;ytrue– истинное значение переменной, то есть так называемый правильный ответ. Например, дляAliceзначениеytrueбудет1, то естьFemale;ypred– предполагаемое значение переменной. Это результат вывода сети.

(ytrue - ypred)2 называют квадратичной ошибкой (MSE). Здесь функция потери просто берет среднее значение по всем квадратичным ошибкам. Отсюда и название ошибки. Чем лучше предсказания, тем ниже потери.

Лучшие предсказания = Меньшие потери.

Тренировка нейронной сети = стремление к минимизации ее потерь.

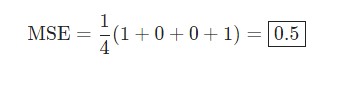

Пример подсчета потерь в тренировки нейронной сети

Скажем, наша сеть всегда выдает 0. Другими словами, она уверена, что все люди — Мужчины. Какой будет потеря?

| Имя/Name | ytrue | ypred | (ytrue — ypred)2 |

| Alice | 1 | 0 | 1 |

| Bob | 0 | 0 | 0 |

| Charlie | 0 | 0 | 0 |

| Diana | 1 | 0 | 1 |

Python код среднеквадратической ошибки (MSE)

Ниже представлен код для подсчета потерь:

|

import numpy as np def mse_loss(y_true, y_pred): # y_true и y_pred являются массивами numpy с одинаковой длиной return ((y_true — y_pred) ** 2).mean() y_true = np.array([1, 0, 0, 1]) y_pred = np.array([0, 0, 0, 0]) print(mse_loss(y_true, y_pred)) # 0.5 |

При возникновении сложностей с пониманием работы кода стоит ознакомиться с quickstart в NumPy для операций с массивами.

Тренировка нейронной сети — многовариантные исчисления, Часть 2

Текущая цель понятна – это минимизация потерь нейронной сети. Теперь стало ясно, что повлиять на предсказания сети можно при помощи изменения ее веса и смещения. Однако, как минимизировать потери?

В этом разделе будут затронуты многовариантные исчисления. Если вы не знакомы с данной темой, фрагменты с математическими вычислениями можно пропускать.

Для простоты давайте представим, что в наборе данных рассматривается только Alice:

| Имя/Name | Вес/Weight (минус 135) | Рост/Height (минус 66) | Пол/Gender |

| Alice | -2 | -1 | 1 |

Затем потеря среднеквадратической ошибки будет просто квадратической ошибкой для Alice:

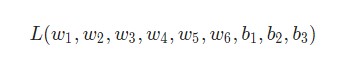

Еще один способ понимания потери – представление ее как функции веса и смещения. Давайте обозначим каждый вес и смещение в рассматриваемой сети:

Затем можно прописать потерю как многовариантную функцию:

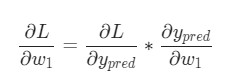

Представим, что нам нужно немного отредактировать w1. В таком случае, как изменится потеря L после внесения поправок в w1?

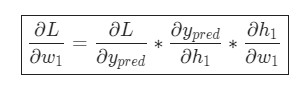

На этот вопрос может ответить частная производная . Как же ее вычислить?

Здесь математические вычисления будут намного сложнее. С первой попытки вникнуть будет непросто, но отчаиваться не стоит. Возьмите блокнот и ручку – лучше делать заметки, они помогут в будущем.

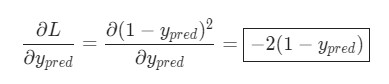

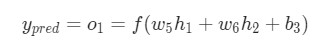

Для начала, давайте перепишем частную производную в контексте :

Подсчитать можно благодаря вычисленной выше

L = (1 - ypred)2:

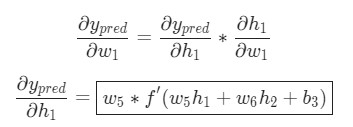

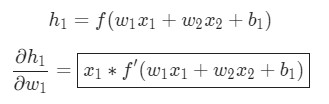

Теперь, давайте определим, что делать с . Как и ранее, позволим

h1, h2, o1 стать результатами вывода нейронов, которые они представляют. Дальнейшие вычисления:

f является функцией активации сигмоида.

Так как w1 влияет только на h1, а не на h2, можно записать:

Те же самые действия проводятся для :

В данном случае х1 — вес, а х2 — рост. Здесь f′(x) как производная функции сигмоида встречается во второй раз. Попробуем вывести ее:

Функция f'(x) в таком виде будет использована несколько позже.

Вот и все. Теперь разбита на несколько частей, которые будут оптимальны для подсчета:

Эта система подсчета частных производных при работе в обратном порядке известна, как метод обратного распространения ошибки, или backprop.

У нас накопилось довольно много формул, в которых легко запутаться. Для лучшего понимания принципа их работы рассмотрим следующий пример.

Пример подсчета частных производных

В данном примере также будет задействована только Alice:

| Имя/Name | Вес/Weight (минус 135) | Рост/Height (минус 66) | Пол/Gender |

| Alice | -2 | -1 | 1 |

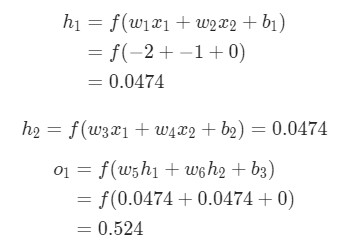

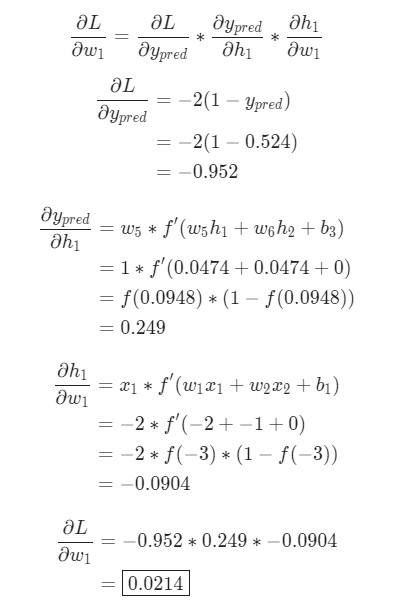

Здесь вес будет представлен как 1, а смещение как 0. Если выполним прямое распространение (feedforward) через сеть, получим:

Выдачи нейронной сети ypred = 0.524. Это дает нам слабое представление о том, рассматривается мужчина Male (0), или женщина Female (1). Давайте подсчитаем :

Напоминание: мы вывели

f '(x) = f (x) * (1 - f (x))ранее для нашей функции активации сигмоида.

У нас получилось! Результат говорит о том, что если мы собираемся увеличить w1, L немного увеличивается в результате.

Тренировка нейронной сети: Стохастический градиентный спуск

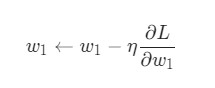

У нас есть все необходимые инструменты для тренировки нейронной сети. Мы используем алгоритм оптимизации под названием стохастический градиентный спуск (SGD), который говорит нам, как именно поменять вес и смещения для минимизации потерь. По сути, это отражается в следующем уравнении:

η является константой под названием оценка обучения, что контролирует скорость обучения. Все что мы делаем, так это вычитаем из

w1:

Если мы применим это на каждый вес и смещение в сети, потеря будет постепенно снижаться, а показатели сети сильно улучшатся.

Наш процесс тренировки будет выглядеть следующим образом:

- Выбираем один пункт из нашего набора данных. Это то, что делает его стохастическим градиентным спуском. Мы обрабатываем только один пункт за раз;

- Подсчитываем все частные производные потери по весу или смещению. Это может быть

,

и так далее;

- Используем уравнение обновления для обновления каждого веса и смещения;

- Возвращаемся к первому пункту.

Давайте посмотрим, как это работает на практике.

Создание нейронной сети с нуля на Python

Наконец, мы реализуем готовую нейронную сеть:

| Имя/Name | Вес/Weight (минус 135) | Рост/Height (минус 66) | Пол/Gender |

| Alice | -2 | -1 | 1 |

| Bob | 25 | 6 | 0 |

| Charlie | 17 | 4 | 0 |

| Diana | -15 | -6 | 1 |

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 |

import numpy as np def sigmoid(x): # Функция активации sigmoid:: f(x) = 1 / (1 + e^(-x)) return 1 / (1 + np.exp(—x)) def deriv_sigmoid(x): # Производная от sigmoid: f'(x) = f(x) * (1 — f(x)) fx = sigmoid(x) return fx * (1 — fx) def mse_loss(y_true, y_pred): # y_true и y_pred являются массивами numpy с одинаковой длиной return ((y_true — y_pred) ** 2).mean() class OurNeuralNetwork: «»» Нейронная сеть, у которой: — 2 входа — скрытый слой с двумя нейронами (h1, h2) — слой вывода с одним нейроном (o1) *** ВАЖНО ***: Код ниже написан как простой, образовательный. НЕ оптимальный. Настоящий код нейронной сети выглядит не так. НЕ ИСПОЛЬЗУЙТЕ этот код. Вместо этого, прочитайте/запустите его, чтобы понять, как работает эта сеть. «»» def __init__(self): # Вес self.w1 = np.random.normal() self.w2 = np.random.normal() self.w3 = np.random.normal() self.w4 = np.random.normal() self.w5 = np.random.normal() self.w6 = np.random.normal() # Смещения self.b1 = np.random.normal() self.b2 = np.random.normal() self.b3 = np.random.normal() def feedforward(self, x): # x является массивом numpy с двумя элементами h1 = sigmoid(self.w1 * x[0] + self.w2 * x[1] + self.b1) h2 = sigmoid(self.w3 * x[0] + self.w4 * x[1] + self.b2) o1 = sigmoid(self.w5 * h1 + self.w6 * h2 + self.b3) return o1 def train(self, data, all_y_trues): «»» — data is a (n x 2) numpy array, n = # of samples in the dataset. — all_y_trues is a numpy array with n elements. Elements in all_y_trues correspond to those in data. «»» learn_rate = 0.1 epochs = 1000 # количество циклов во всём наборе данных for epoch in range(epochs): for x, y_true in zip(data, all_y_trues): # — Выполняем обратную связь (нам понадобятся эти значения в дальнейшем) sum_h1 = self.w1 * x[0] + self.w2 * x[1] + self.b1 h1 = sigmoid(sum_h1) sum_h2 = self.w3 * x[0] + self.w4 * x[1] + self.b2 h2 = sigmoid(sum_h2) sum_o1 = self.w5 * h1 + self.w6 * h2 + self.b3 o1 = sigmoid(sum_o1) y_pred = o1 # — Подсчет частных производных # — Наименование: d_L_d_w1 представляет «частично L / частично w1» d_L_d_ypred = —2 * (y_true — y_pred) # Нейрон o1 d_ypred_d_w5 = h1 * deriv_sigmoid(sum_o1) d_ypred_d_w6 = h2 * deriv_sigmoid(sum_o1) d_ypred_d_b3 = deriv_sigmoid(sum_o1) d_ypred_d_h1 = self.w5 * deriv_sigmoid(sum_o1) d_ypred_d_h2 = self.w6 * deriv_sigmoid(sum_o1) # Нейрон h1 d_h1_d_w1 = x[0] * deriv_sigmoid(sum_h1) d_h1_d_w2 = x[1] * deriv_sigmoid(sum_h1) d_h1_d_b1 = deriv_sigmoid(sum_h1) # Нейрон h2 d_h2_d_w3 = x[0] * deriv_sigmoid(sum_h2) d_h2_d_w4 = x[1] * deriv_sigmoid(sum_h2) d_h2_d_b2 = deriv_sigmoid(sum_h2) # — Обновляем вес и смещения # Нейрон h1 self.w1 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_w1 self.w2 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_w2 self.b1 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_b1 # Нейрон h2 self.w3 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_w3 self.w4 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_w4 self.b2 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_b2 # Нейрон o1 self.w5 -= learn_rate * d_L_d_ypred * d_ypred_d_w5 self.w6 -= learn_rate * d_L_d_ypred * d_ypred_d_w6 self.b3 -= learn_rate * d_L_d_ypred * d_ypred_d_b3 # — Подсчитываем общую потерю в конце каждой фазы if epoch % 10 == 0: y_preds = np.apply_along_axis(self.feedforward, 1, data) loss = mse_loss(all_y_trues, y_preds) print(«Epoch %d loss: %.3f» % (epoch, loss)) # Определение набора данных data = np.array([ [—2, —1], # Alice [25, 6], # Bob [17, 4], # Charlie [—15, —6], # Diana ]) all_y_trues = np.array([ 1, # Alice 0, # Bob 0, # Charlie 1, # Diana ]) # Тренируем нашу нейронную сеть! network = OurNeuralNetwork() network.train(data, all_y_trues) |

Вы можете поэкспериментировать с этим кодом самостоятельно. Он также доступен на Github.

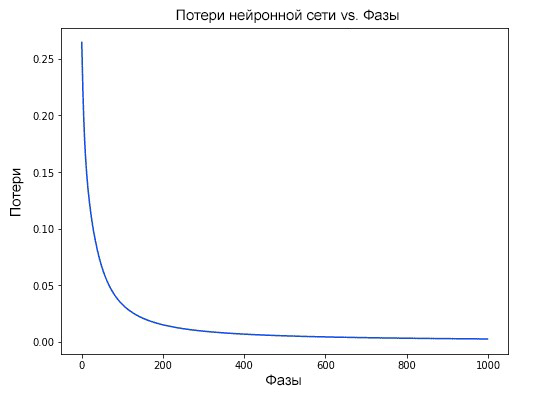

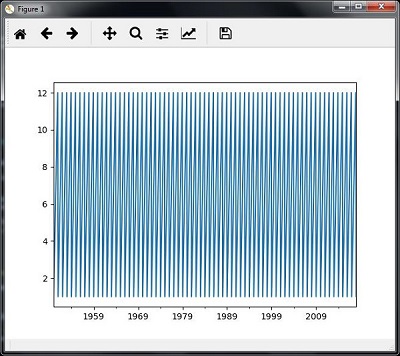

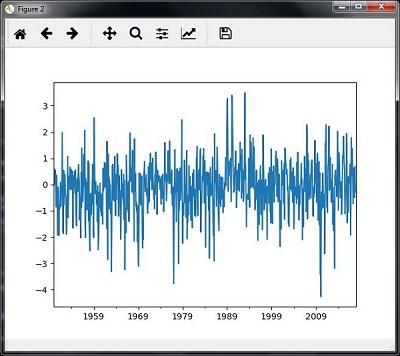

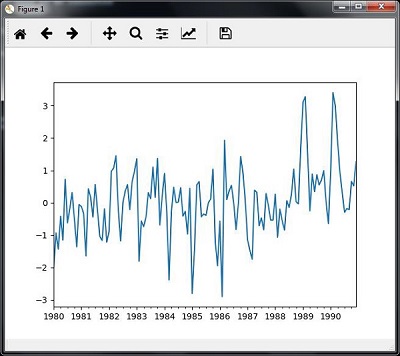

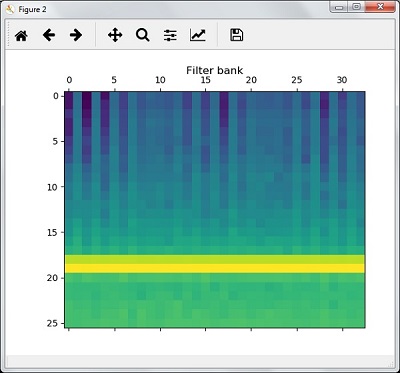

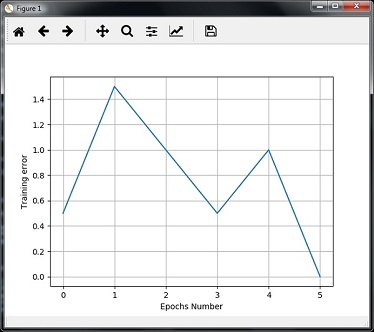

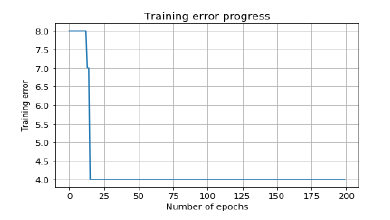

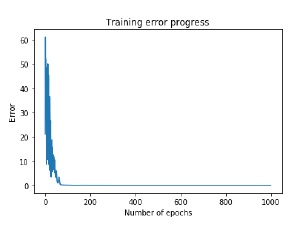

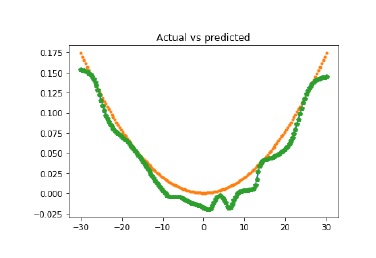

Наши потери постоянно уменьшаются по мере того, как учится нейронная сеть:

Теперь мы можем использовать нейронную сеть для предсказания полов:

|

# Делаем предсказания emily = np.array([—7, —3]) # 128 фунтов, 63 дюйма frank = np.array([20, 2]) # 155 фунтов, 68 дюймов print(«Emily: %.3f» % network.feedforward(emily)) # 0.951 — F print(«Frank: %.3f» % network.feedforward(frank)) # 0.039 — M |

Что теперь?

У вас все получилось. Вспомним, как мы это делали:

- Узнали, что такое нейроны, как создать блоки нейронных сетей;

- Использовали функцию активации сигмоида в отношении нейронов;

- Увидели, что по сути нейронные сети — это просто набор нейронов, связанных между собой;

- Создали набор данных с параметрами вес и рост в качестве входных данных (или функций), а также использовали пол в качестве вывода (или маркера);

- Узнали о функциях потерь и среднеквадратичной ошибке (MSE);

- Узнали, что тренировка нейронной сети — это минимизация ее потерь;

- Использовали обратное распространение для вычисления частных производных;

- Использовали стохастический градиентный спуск (SGD) для тренировки нейронной сети.

Подробнее о построении нейронной сети прямого распросранения Feedforward можно ознакомиться в одной из предыдущих публикаций.

Спасибо за внимание!

Являюсь администратором нескольких порталов по обучению языков программирования Python, Golang и Kotlin. В составе небольшой команды единомышленников, мы занимаемся популяризацией языков программирования на русскоязычную аудиторию. Большая часть статей была адаптирована нами на русский язык и распространяется бесплатно.

E-mail: vasile.buldumac@ati.utm.md

Образование

Universitatea Tehnică a Moldovei (utm.md)

- 2014 — 2018 Технический Университет Молдовы, ИТ-Инженер. Тема дипломной работы «Автоматизация покупки и продажи криптовалюты используя технический анализ»

- 2018 — 2020 Технический Университет Молдовы, Магистр, Магистерская диссертация «Идентификация человека в киберпространстве по фотографии лица»

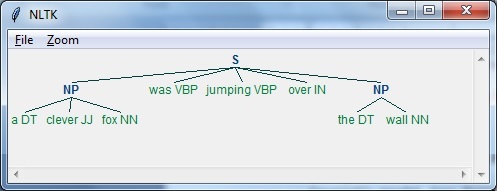

Введение

Создать программу искусственного интеллекта, которая воспринимает человеческую речь и отвечает на вопросы, не так сложно, как кажется. Такое чудо можно сотворить за один день с помощью нескольких пакетов Python и API.

Вот ответы бота на некоторые вопросы.

Я: КАКОВА ЧИСЛЕННОСТЬ НАСЕЛЕНИЯ БРАЗИЛИИ?

БОТ: ПО ОЦЕНКАМ, НАСЕЛЕНИЕ БРАЗИЛИИ СОСТАВЛЯЕТ БОЛЕЕ 209 МИЛЛИОНОВ ЧЕЛОВЕК.

Я: КАКОЕ НА ВКУС МОРОЖЕНОЕ?

БОТ: БОЛЬШИНСТВО ВИДОВ МОРОЖЕНОГО НА ВКУС СЛАДКИЕ И СЛИВОЧНЫЕ.

Я: КАК ПОЛЬЗОВАТЬСЯ ПАЛОЧКАМИ ДЛЯ ЕДЫ?

БОТ: ЧТОБЫ ИСПОЛЬЗОВАТЬ ПАЛОЧКИ ДЛЯ ЕДЫ, НУЖНО ДЕРЖАТЬ ПО ОДНОЙ В КАЖДОЙ РУКЕ. ПОМЕСТИТЕ ПАЛОЧКУ ДЛЯ ЕДЫ В ДОМИНИРУЮЩУЮ РУКУ МЕЖДУ БОЛЬШИМ И УКАЗАТЕЛЬНЫМ ПАЛЬЦАМИ И УДЕРЖИВАЙТЕ ЕЕ СРЕДНИМ ПАЛЬЦЕМ. ПОМЕСТИТЕ ПАЛОЧКУ ДЛЯ ЕДЫ В НЕДОМИНИРУЮЩУЮ РУКУ МЕЖДУ БОЛЬШИМ И УКАЗАТЕЛЬНЫМ ПАЛЬЦАМИ И УДЕРЖИВАЙТЕ ЕЕ БЕЗЫМЯННЫМ ПАЛЬЦЕМ И МИЗИНЦЕМ. ЧТОБЫ ВЗЯТЬ ЕДУ, ИСПОЛЬЗУЙТЕ ПАЛОЧКУ ДЛЯ ЕДЫ В ДОМИНИРУЮЩЕЙ РУКЕ, ЧТОБЫ УДЕРЖИВАТЬ ЕДУ, А ЗАТЕМ ИСПОЛЬЗУЙТЕ ЭТУ ПАЛОЧКУ ДЛЯ ЕДЫ.

Конечно, это не самые содержательные ответы. А концовка ответа про палочки для еды и вовсе довольно странная. Однако тот факт, что подобное приложение может интерпретировать речь и отвечать на вопросы, какими бы ограниченными ни казались ответы, довольно поразителен. К тому же мы можем посмотреть, как устроен виртуальный ассистент и поэкспериментировать с ним.

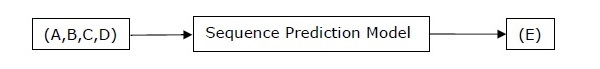

Что делает эта программа

- Файл запускается через командную строку, когда пользователь готов задать вопрос.

- PyAudio позволяет микрофону компьютера улавливать речевые данные.

- Аудиоданные хранятся в переменной под названием

stream, затем кодируются и преобразуются в JSON-данные. - JSON-данные поступают в API AssemblyAI для преобразования в текст, после чего текстовые данные отправляются обратно.

- Текстовые данные поступают в API OpenAI, а затем направляются в движок

text-davinci-002для обработки. - Ответ на вопрос извлекается и отображается на консоли под заданным вопросом.

API и высокоуровневый дизайн

В этом руководстве используются два базовых API:

- AssemblyAI для преобразования аудио в текст.

- OpenAI для интерпретации вопроса и получения ответа.

Дизайн (высокий уровень)

Этот проект содержит два файла: main и openai_helper.

Скрипт main используется в основном для API-соединения “голос-текст”. Он включает в себя настройку сервера WebSockets, заполнение всех параметров, необходимых для PyAudio, и создание асинхронных функций, необходимых для одновременной отправки и получения речевых данных между приложением и сервером AssemblyAI.

openai_helper — файл с коротким именем, используемый исключительно для подключения к OpenAI-движку text-davinci-002. Это соединение обеспечивает получение ответов на вопросы.

Разбор кода

main.py

Сначала импортируем все библиотеки, которые будут использованы приложением. Для некоторых из них может потребоваться Pip-установка (в зависимости от того, использовали ли вы их). Обратите внимание на комментарии к коду ниже:

#PyAudio предоставляет привязку к Python для PortAudio v19, кроссплатформенной библиотеки ввода-вывода аудио. Позволяет микрофону компьютера взаимодействовать с Python

import pyaudio

#Библиотека Python для создания сервера Websocket - двустороннего интерактивного сеанса связи между браузером пользователя и сервером

import websockets

#asyncio - это библиотека для написания параллельного кода с использованием синтаксиса async/await

import asyncio

#Этот модуль предоставляет функции для кодирования двоичных данных в печатаемые символы ASCII и декодирования таких кодировок обратно в двоичные данные

import base64

#В Python есть встроенный пакет json, который можно использовать для работы с данными JSON

import json

#"Подтягивание" функции из другого файла

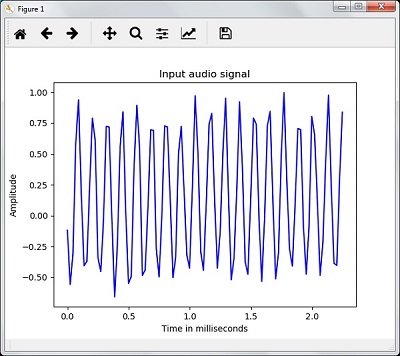

from openai_helper import ask_computerТеперь устанавливаем параметры PyAudio. Эти параметры являются настройками по умолчанию, найденными в интернете. Вы можете поэкспериментировать с ними по мере необходимости, но мне параметры по умолчанию подошли отлично. Устанавливаем переменную stream в качестве начального контейнера для аудиоданных, а затем выводим параметры устройства ввода по умолчанию в виде словаря. Ключи словаря отражают поля данных в структуре PortAudio. Вот код:

#Настройка параметров микрофона

#Сколько байт данных приходится на каждый обработанный фрагмент звука

FRAMES_PER_BUFFER = 3200

#Битовый целочисленный формат аудиовхода/выхода порта по умолчанию

FORMAT = pyaudio.paInt16

#Моноформатный канал (то есть нам нужен только входной аудиосигнал, поступающий с одного направления)

CHANNELS = 1

#Желаемая частота в Гц входящего аудиосигнала

RATE = 16000

p = pyaudio.PyAudio()

#Начинает запись, создает переменную stream, присваивает параметры

stream = p.open(

format=FORMAT,

channels=CHANNELS,

rate=RATE,

input=True,

frames_per_buffer=FRAMES_PER_BUFFER

)

print(p.get_default_input_device_info())Далее создаем несколько асинхронных функций для отправки и получения данных, необходимых для преобразования голосовых вопросов в текст. Эти функции выполняются параллельно, что позволяет преобразовывать речевые данные в формат base64, конвертировать их в JSON, отправлять на сервер через API, а затем получать обратно в читаемом формате. Сервер WebSockets также является важной частью приведенного ниже скрипта, поскольку именно он делает прямой поток бесшовным.

#Необходимая нам конечная точка AssemblyAI

URL = "wss://api.assemblyai.com/v2/realtime/ws?sample_rate=16000"

auth_key = "enter key here"#Создание асинхронной функции, чтобы она могла продолжать работать и отправлять поток речевых данных в API до тех пор, пока это необходимо

async def send_receive():

print(f'Connecting websocket to url ${URL}')

async with websockets.connect(

URL,

extra_headers=(("Authorization", auth_key),),

ping_interval=5,

ping_timeout=20

) as _ws:

await asyncio.sleep(0.1)

print("Receiving SessionBegins ...")

session_begins = await _ws.recv()

print(session_begins)

print("Sending messages ...")

async def send():

while True:

try:

data = stream.read(FRAMES_PER_BUFFER, exception_on_overflow=False)

data = base64.b64encode(data).decode("utf-8")

json_data = json.dumps({"audio_data":str(data)})

await _ws.send(json_data)

except websockets.exceptions.ConnectionClosedError as e:

print(e)

assert e.code == 4008

break

except Exception as e:

assert False, "Not a websocket 4008 error"

await asyncio.sleep(0.01)

return True

async def receive():

while True:

try:

result_str = await _ws.recv()

result = json.loads(result_str)

prompt = result['text'] if prompt and result['message_type'] == 'FinalTranscript':

print("Me:", prompt)

answer = ask_computer(prompt)

print("Bot", answer)

except websockets.exceptions.ConnectionClosedError as e:

print(e)

assert e.code == 4008

break

except Exception as e:

assert False, "Not a websocket 4008 error"

send_result, receive_result = await asyncio.gather(send(), receive())

asyncio.run(send_receive())

Теперь у нас есть простое API-соединение с OpenAI. Если вы посмотрите на строку 44 приведенного выше кода (main3.py), то увидите, что мы извлекаем функцию ask_computer из этого другого файла и используем ее результаты в качестве ответов на вопросы.

Заключение

Это отличный проект для всех, кто не прочь взять на вооружение ту самую технологию, благодаря которой функционируют Siri и Alexa. Его реализация не требует большого опыта в программировании, потому что для обработки данных используется API.

Весь код хранится в этом репозитории.

Источник

Просмотры: 1 645

В продолжении предыдущей статьи мы займемся разработкой более сложного искусственного интеллекта, что будет различать фото кошек и собак.

В прошлой статье мы рассмотрели базовые концепции нейронной сети. На этот раз мы создадим куда более сложный проект, что будет распознавать пользовательские картинки. Нейронная сеть будет понимать: находится ли на фото изображение кота или же изображение собачки.

Что будет в нашей программе?

Наш искусственный интеллект не будет распознавать все объекты, по типу: машин, других животных, людей и тому прочее. Не будет он это делать по одной причине. Мы в качестве датасета или же, другими словами, набора данных для тренировки – будем использовать датасет от компании Microsoft. В датасете у них собрано более 25 000 фотографий котов и собачек, что даст нам возможность натренировать правильные весы для распознавания наших собственных фото.

Мы не будем сами искать варианты для обучения нейронной сети и на это есть два фактора:

- В этом нет смысла, так как для подобных задач, зачастую, уже есть различные датасеты с подготовленными данными, которые можно использовать.

- У нас уйдет слишком много времени и сил на тренировку своей нейронной сети. Только представьте, нам потребуется найти и произвести обучение на тысячах фотографиях. Это не только очень сложно, но и дорого.

По этой причине для нашей программы мы воспользуемся готовым набором данных.

Какие библиотеки нам потребуются?

В прошлой статье мы использовали лишь одну библиотеку – numpy. Без этой библиотеки нам не обойтись и в этот раз.

Numpy – библиотека, что позволяет поддерживать множество функций для работы с массивами, а также содержит поддержку высокоуровневых математических функций, предназначенных для работы с многомерными массивами.

По причине того, что нейронные сети – это математика, массивы и наборы данных, то без numpy – не обойтись.

Также мы будем использовать библиотеку Tensorflow. Она создана компанией Google и служит для решения задач построения и тренировки нейронной сети. За счет неё процесс обучение нейронки немного проще, нежели при написании с использованием только

numpy

.

Ну и последняя, но не менее важная – библиотека Matplotlib. Она служит для визуализации данных двумерной графикой. На её основе можно построить графики, изображения и прочие визуальные данные, которые человеком воспринимаются гораздо проще и лучше, нежели нули и единицы.

Среда разработки

В качестве среды разработки мы будем использовать специальный сервис от Google — Colab. Colab позволяет любому писать и выполнять произвольный код Python через браузер и особенно хорошо подходит для машинного обучения, анализа данных и обучения.

Colab полностью бесплатен и позволяет выполнять код блоками. К примеру, мы можем выполнить блок кода, где у нас идет обучение нейронки, а далее мы можем всегда выполнять не всю программу с начала и до конца, а лишь тот участок кода, где мы указываем новые данные для тестирования уже обученной нейронки.

Такой принцип существенно экономит время и по этой причине мы и будем использовать сервис Google Colab.

Создание проекта

Полная разработка проекта показывается в видео. Вы можете просмотреть его ниже:

Полезные ссылки:

- Сервис Google Colab;

- Распознавание объектов на видео.

Код для реализации проекта из видео:

# Импорт библиотек и классов

import numpy as np

import tensorflow as tf

import tensorflow_datasets as tfds

from tensorflow.keras.preprocessing.image import load_img, img_to_array

from tensorflow.keras.layers import Dense, GlobalAveragePooling2D, Dropout

import matplotlib.pyplot as plt

from google.colab import files

# Подгрузка датасета от Microsoft

train, _ = tfds.load('cats_vs_dogs', split=['train[:100%]'], with_info=True, as_supervised=True)

# Функция для изменения размеров изображений

SIZE = (224, 224)

def resize_image(img, label):

img = tf.cast(img, tf.float32)

img = tf.image.resize(img, SIZE)

img /= 255.0

return img, label

# Уменьшаем размеры всех изображений, полученных из датасета

train_resized = train[0].map(resize_image)

train_batches = train_resized.shuffle(1000).batch(16)

# Создание основного слоя для создания модели

base_layers = tf.keras.applications.MobileNetV2(input_shape=(SIZE[0], SIZE[1], 3), include_top=False)

# Создание модели нейронной сети

model = tf.keras.Sequential([

base_layers,

GlobalAveragePooling2D(),

Dropout(0.2),

Dense(1)

])

model.compile(optimizer='adam', loss=tf.keras.losses.BinaryCrossentropy(from_logits=True), metrics=['accuracy'])

# Обучение нейронной сети (наши картинки, одна итерация обучения)

model.fit(train_batches, epochs=1)

# Функция для подгрузки изображений

files.upload()

# Сюда укажите названия подгруженных изображений

images = []

# Перебираем все изображения и даем нейронке шанс определить что находиться на фото

for i in images:

img = load_img(i)

img_array = img_to_array(img)

img_resized, _ = resize_image(img_array, _)

img_expended = np.expand_dims(img_resized, axis=0)

prediction = model.predict(img_expended)

plt.figure()

plt.imshow(img)

label = 'Собачка' if prediction > 0 else 'Кошка'

plt.title('{}'.format(label))Изучение программирования

А вы хотите стать программистом и начать разрабатывать самостоятельно ИИ или хотя бы использовать уже готовые для своих собственных проектов? Предлагаем нашу программу обучения по языку Python. В ходе программы вы научитесь работать с языком, изучите построение мобильных проектов, научитесь создавать полноценные веб сайты на основе фреймворка Джанго, а также в курсе будет модуль по изучению нескольких готовых библиотек для искусственного интеллекта.

С помощью статьи PhD Оксфордского университета и автора книг о глубоком обучении Эндрю Траска показываем, как написать простую нейронную сеть на Python. Она умещается всего в девять строчек кода и выглядит вот так:

from numpy import exp, array, random, dot

training_set_inputs = array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])

training_set_outputs = array([[0, 1, 1, 0]]).T

random.seed(1)

synaptic_weights = 2 * random.random((3, 1)) — 1

for iteration in xrange(10000):

output = 1 / (1 + exp(-(dot(training_set_inputs, synaptic_weights))))

synaptic_weights += dot(training_set_inputs.T, (training_set_outputs — output) * output * (1 — output))

print 1 / (1 + exp(-(dot(array([1, 0, 0]), synaptic_weights))))

Чуть ниже объясним как получается этот код и какой дополнительный код нужен к нему, чтобы нейросеть работала. Но сначала небольшое отступление о нейросетях и их устройстве.

Человеческий мозг состоит из ста миллиардов клеток, которые называются нейронами. Они соединены между собой синапсами. Если через синапсы к нейрону придет достаточное количество нервных импульсов, этот нейрон сработает и передаст нервный импульс дальше. Этот процесс лежит в основе нашего мышления.

Мы можем смоделировать это явление, создав нейронную сеть с помощью компьютера. Нам не нужно воссоздавать все сложные биологические процессы, которые происходят в человеческом мозге на молекулярном уровне, нам достаточно знать, что происходит на более высоких уровнях.

Для этого мы используем математический инструмент — матрицы, которые представляют собой таблицы чисел. Чтобы сделать все как можно проще, мы смоделируем только один нейрон, к которому поступает входная информация из трех источников и есть только один выход (рис. 1). Наша задача — научить нейронную сеть решать задачу, которая изображена на рисунке ниже. Первые четыре примера будут нашим тренировочным набором. Получилось ли у вас увидеть закономерность? Что должно быть на месте вопросительного знака — 0 или 1?

Вы могли заметить, что вывод всегда равен значению левого столбца. Так что ответом будет 1.

Но как научить наш нейрон правильно отвечать на заданный вопрос? Для этого мы зададим каждому входящему сигналу вес, который может быть положительным или отрицательным числом. Если на входе будет сигнал с большим положительным весом или отрицательным весом, то это сильно повлияет на решение нейрона, которое он подаст на выход. Прежде чем мы начнем обучение модели, зададим для каждого примера случайное число в качестве веса. После этого мы можем приняться за тренировочный процесс, который будет выглядеть следующим образом:

- В качестве входных данных мы возьмем примеры из тренировочного набора. Потом мы воспользуемся специальной формулой для расчета выхода нейрона, которая будет учитывать случайные веса, которые мы задали для каждого примера.

- Далее посчитаем размер ошибки, который вычисляется как разница между числом, которое нейрон подал на выход и желаемым числом из примера.

- В зависимости от того, в какую сторону нейрон ошибся, мы немного отрегулируем вес этого примера.

- Повторим этот процесс 10 000 раз.

В какой-то момент веса достигнут оптимальных значений для тренировочного набора. Если после этого нейрону будет дана новая задача, которая следует такой же закономерности, он должен дать верный ответ.

Итак, что же из себя представляет формула, которая рассчитывает значение выхода нейрона? Для начала мы возьмем взвешенную сумму входных сигналов:

После этого мы нормализуем это выражение, чтобы результат был между 0 и 1. Для этого, в этом примере, я использую математическую функцию, которая называется сигмоидой:

Если мы нарисуем график этой функции, то он будет выглядеть как кривая в форме буквы S (рис. 4).

Подставив первое уравнения во второе, мы получим итоговую формулу выхода нейрона.

Вы можете заметить, что для простоты мы не задаем никаких ограничений на входящие данные, предполагая, что входящий сигнал всегда достаточен для того, чтобы наш нейрон подал сигнал на выход.

Во время тренировочного цикла (он изображен на рисунке 3) мы постоянно корректируем веса. Но на сколько? Для того, чтобы вычислить это, мы воспользуемся следующей формулой:

Давайте поймем почему формула имеет такой вид. Сначала нам нужно учесть то, что мы хотим скорректировать вес пропорционально размеру ошибки. Далее ошибка умножается на значение, поданное на вход нейрона, что, в нашем случае, 0 или 1. Если на вход был подан 0, то вес не корректируется. И в конце выражение умножается на градиент сигмоиды. Разберемся в последнем шаге по порядку:

- Мы использовали сигмоиду для того, чтобы посчитать выход нейрона.

- Если на выходе мы получаем большое положительное или отрицательное число, то это значит, что нейрон был весьма уверен в том или ином решении.

- На рисунке 4 мы можем увидеть, что при больших значениях переменной градиент принимает маленькие значения.

- Если нейрон уверен в том, что заданный вес верен, то мы не хотим сильно корректировать его. Умножение на градиент сигмоиды позволяет добиться такого эффекта.

Градиент сигмоиды может быть найден по следующей формуле:

Таким образом, подставляя второе уравнение в первое, конечная формула для корректировки весов будет выглядеть следующим образом:

Существуют и другие формулы, которые позволяют нейрону обучаться быстрее, но преимущество этой формулы в том, что она достаточно проста для понимания.

Хотя мы не будем использовать специальные библиотеки для нейронных сетей, мы импортируем следующие 4 метода из математической библиотеки numpy:

- exp — функция экспоненты

- array — метод создания матриц

- dot — метод перемножения матриц

- random — метод, подающий на выход случайное число

Теперь мы можем, например, представить наш тренировочный набор с использованием array():

training_set_inputs = array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])=

training_set_outputs = array([[0, 1, 1, 0]]).T

Функция .T транспонирует матрицу из горизонтальной в вертикальную. В результате компьютер хранит эти числа таким образом:

Теперь мы готовы к более изящной версии кода. После нее добавим несколько финальных замечаний.

Обратите внимание, что на каждой итерации мы обрабатываем весь тренировочный набор одновременно. Таким образом наши переменные все являются матрицами.

Итак, вот полноценно работающий пример нейронной сети, написанный на Python:

from numpy import exp, array, random, dot

class NeuralNetwork():

def __init__(self):

Задаем порождающий элемент для генератора случайных чисел, чтобы он генерировал одинаковые числа при каждом запуске программы

random.seed(1)

Мы моделируем единственный нейрон с тремя входящими связями и одним выходом. Мы задаем случайные веса в матрице размера 3 x 1, где значения весов варьируются от -1 до 1, а среднее значение равно 0.

self.synaptic_weights = 2 * random.random((3, 1)) — 1

Функция сигмоиды, график которой имеет форму буквы S.

Мы используем эту функцию, чтобы нормализовать взвешенную сумму входных сигналов.

def __sigmoid(self, x):

return 1 / (1 + exp(-x))

Производная от функции сигмоиды. Это градиент ее кривой. Его значение указывает насколько нейронная сеть уверена в правильности существующего веса.

def __sigmoid_derivative(self, x):

return x * (1 — x)

Мы тренируем нейронную сеть методом проб и ошибок, каждый раз корректируя вес синапсов.

def train(self, training_set_inputs, training_set_outputs, number_of_training_iterations):

for iteration in xrange(number_of_training_iterations):

Тренировочный набор передается нейронной сети (одному нейрону в нашем случае).

output = self.think(training_set_inputs)

Вычисляем ошибку (разницу между желаемым выходом и выходом, предсказанным нейроном).

error = training_set_outputs — output

Умножаем ошибку на входной сигнал и на градиент сигмоиды. В результате этого, те веса, в которых нейрон не уверен, будут откорректированы сильнее. Входные сигналы, которые равны нулю, не приводят к изменению веса.

adjustment = dot(training_set_inputs.T, error * self.__sigmoid_derivative(output))

Корректируем веса.

self.synaptic_weights += adjustment

Заставляем наш нейрон подумать.

def think(self, inputs):

Пропускаем входящие данные через нейрон.

return self.__sigmoid(dot(inputs, self.synaptic_weights))

if __name__ == «__main__»:

Инициализируем нейронную сеть, состоящую из одного нейрона.

neural_network = NeuralNetwork()

print «Random starting synaptic weights:

» print neural_network.synaptic_weights

Тренировочный набор для обучения. У нас это 4 примера, состоящих из 3 входящих значений и 1 выходящего значения.

training_set_inputs = array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])

training_set_outputs = array([[0, 1, 1, 0]]).T

Обучаем нейронную сеть на тренировочном наборе, повторяя процесс 10000 раз, каждый раз корректируя веса.

neural_network.train(training_set_inputs, training_set_outputs, 10000)

print «New synaptic weights after training:

» print neural_network.synaptic_weights

Тестируем нейрон на новом примере.

print «Considering new situation [1, 0, 0] -> ?:

» print neural_network.think(array([1, 0, 0]))

Этот код также можно найти на GitHub. Обратите внимание, что если вы используете Python 3, то вам будет нужно заменить команду “xrange” на “range”.

Попробуйте теперь запустить нейронную сеть, используя в терминале эту команду:

python main.py

Результат должен быть таким:

Random starting synaptic weights:

[[-0.16595599]

[ 0.44064899]

[-0.99977125]]

New synaptic weights after training:

[[ 9.67299303]

[-0.2078435 ]

[-4.62963669]]

Considering new situation

[1, 0, 0] -> ?: [ 0.99993704]

Ура, мы построили простую нейронную сеть с помощью Python!

Сначала нейронная сеть задала себе случайные веса, затем обучилась на тренировочном наборе. После этого она предсказала в качестве ответа 0.99993704 для нового примера [1, 0, 0]. Верный ответ был 1, так что это очень близко к правде!

Традиционные компьютерные программы обычно не способны обучаться. И это то, что делает нейронные сети таким поразительным инструментом: они способны учиться, адаптироваться и реагировать на новые обстоятельства. Точно так же, как и человеческий мозг.

Конечно, мы создали модель всего лишь одного нейрона для решения очень простой задачи. Но что если мы соединим миллионы нейронов? Сможем ли мы таким образом однажды воссоздать реальное сознание?

18 февраля 2022 Python

В этом руководстве разберем создание голосового бота использующего технологии нейронных сетей на языке Python. Бот может распознавать человеческий голос в реальном времени с вашего устройства, например с микрофона ноутбука, и произносить осознанные ответы, которые обрабатывает нейронная сеть.

Бот состоит из двух основных частей: это часть обрабатывающая словарь и часть с голосовым ассистентом.

Всю разработку по написанию бота вы можете вести в IDE PyCharm, скачать можно с официального сайта JetBrains.

Все необходимые библиотеки можно установить с помощью PyPI прямо в консоле PyCharm. Команды для установки вы можете найти на официальном сайте в разделе нужной библиотеки.

Проблема возникла только с библиотекой PyAudio в Windows. Помогло следующее решение:

pip install pipwin pipwin install pyaudio

Дата-сет

Дата-сет — это набор данных для анализа. В нашем случае это будет некий текстовый файл содержащий строки в виде вопросответ.

Все строки текста перебираются с помощью функции for, при этом из текста удаляются все ненужные символы по маске, находящейся в переменной alphabet. Каждое значение строки раздельно заносится в массив dataset.

После обработки текста все его значения преобразуются в вектора с помощью библиотеки для машинного обучения Scikit-learn. В этом примере используется функция CountVectorizer(). Далее всем векторам присваивается класс с помощью классификатора LogisticRegression().

Когда приходит сообщение от пользователя оно так же преобразуется в вектор, и далее нейросеть пытается найти похожий вектор в датасете соответствующий какому-то вопросу, когда вектор найден, мы получим ответ.

Голосовой ассистент

Для распознавания голоса и озвучивания ответов бота, используется библиотека SpeechRecognition. Система ждет в бесконечном цикле, когда придет вопрос, в нашем случае голос с микрофона, после чего преобразует его в текст и отправляет на обработку в нейросеть. После получения текстового ответа он преобразуется в речь, запись сохраняется в папке с проектом и удаляется после воспроизведения. Вот так все просто! Для удобства все сообщения дублируются текстом в консоль.

При дефолтных настройках время ответа было достаточно долгим, иногда нужно было ждать по 15-30 сек. К тому же вопрос принимался от малейшего шума. Помогли следующие настройки:

voice_recognizer.dynamic_energy_threshold = False voice_recognizer.energy_threshold = 1000 voice_recognizer.pause_threshold = 0.5

И timeout = None, phrase_time_limit = 2 в функции listen()

После чего бот стал отвечать с минимальной задержкой.

Возможно вам подойдут другие значения. Описание этих и других настроек вы можете посмотреть все на том же сайте PyPI в разделе библиотеки SpeechRecognition. Но настройку phrase_time_limit я там почему-то не нашел, наткнулся на нее случайно в Stack Overflow.

Текст дата-сета

Это небольшой пример текста. Конечно же вопросов и ответов должно быть гораздо больше.

приветпривет как делавсё прекрасно как деласпасибо отлично кто тыя бот что делаешьс тобой разговариваю

Код Python

import speech_recognition as sr

from gtts import gTTS

import playsound

import os

import random

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.linear_model import LogisticRegression

# Словарь

def clean_str(r):

r = r.lower()

r = [c for c in r if c in alphabet]

return ''.join(r)

alphabet = ' 1234567890-йцукенгшщзхъфывапролджэячсмитьбюёqwertyuiopasdfghjklzxcvbnm'

with open('dialogues.txt', encoding='utf-8') as f:

content = f.read()

blocks = content.split('n')

dataset = []

for block in blocks:

replicas = block.split('\')[:2]

if len(replicas) == 2:

pair = [clean_str(replicas[0]), clean_str(replicas[1])]

if pair[0] and pair[1]:

dataset.append(pair)

X_text = []

y = []

for question, answer in dataset[:10000]:

X_text.append(question)

y += [answer]

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(X_text)

clf = LogisticRegression()

clf.fit(X, y)

def get_generative_replica(text):

text_vector = vectorizer.transform([text]).toarray()[0]

question = clf.predict([text_vector])[0]

return question

# Голосовой ассистент

def listen():

voice_recognizer = sr.Recognizer()

voice_recognizer.dynamic_energy_threshold = False

voice_recognizer.energy_threshold = 1000

voice_recognizer.pause_threshold = 0.5

with sr.Microphone() as source:

print("Говорите 🎤")

audio = voice_recognizer.listen(source, timeout = None, phrase_time_limit = 2)

try:

voice_text = voice_recognizer.recognize_google(audio, language="ru")

print(f"Вы сказали: {voice_text}")

return voice_text

except sr.UnknownValueError:

return "Ошибка распознания"

except sr.RequestError:

return "Ошибка соединения"

def say(text):

voice = gTTS(text, lang="ru")

unique_file = "audio_" + str(random.randint(0, 10000)) + ".mp3"

voice.save(unique_file)

playsound.playsound(unique_file)

os.remove(unique_file)

print(f"Бот: {text}")

def handle_command(command):

command = command.lower()

reply = get_generative_replica(command)

say(reply)

def stop():

say("Пока")

def start():

print(f"Запуск бота...")

while True:

command = listen()

handle_command(command)

try:

start()

except KeyboardInterrupt:

stop()

Пример одного из самых популярных голосовых помощников — это яндекс алиса.

AI with Python – Primer Concept

Since the invention of computers or machines, their capability to perform various tasks has experienced an exponential growth. Humans have developed the power of computer systems in terms of their diverse working domains, their increasing speed, and reducing size with respect to time.

A branch of Computer Science named Artificial Intelligence pursues creating the computers or machines as intelligent as human beings.

Basic Concept of Artificial Intelligence (AI)

According to the father of Artificial Intelligence, John McCarthy, it is “The science and engineering of making intelligent machines, especially intelligent computer programs”.

Artificial Intelligence is a way of making a computer, a computer-controlled robot, or a software think intelligently, in the similar manner the intelligent humans think. AI is accomplished by studying how human brain thinks and how humans learn, decide, and work while trying to solve a problem, and then using the outcomes of this study as a basis of developing intelligent software and systems.

While exploiting the power of the computer systems, the curiosity of human, lead him to wonder, “Can a machine think and behave like humans do?”

Thus, the development of AI started with the intention of creating similar intelligence in machines that we find and regard high in humans.

The Necessity of Learning AI

As we know that AI pursues creating the machines as intelligent as human beings. There are numerous reasons for us to study AI. The reasons are as follows −

AI can learn through data

In our daily life, we deal with huge amount of data and human brain cannot keep track of so much data. That is why we need to automate the things. For doing automation, we need to study AI because it can learn from data and can do the repetitive tasks with accuracy and without tiredness.

AI can teach itself

It is very necessary that a system should teach itself because the data itself keeps changing and the knowledge which is derived from such data must be updated constantly. We can use AI to fulfill this purpose because an AI enabled system can teach itself.

AI can respond in real time

Artificial intelligence with the help of neural networks can analyze the data more deeply. Due to this capability, AI can think and respond to the situations which are based on the conditions in real time.

AI achieves accuracy

With the help of deep neural networks, AI can achieve tremendous accuracy. AI helps in the field of medicine to diagnose diseases such as cancer from the MRIs of patients.

AI can organize data to get most out of it

The data is an intellectual property for the systems which are using self-learning algorithms. We need AI to index and organize the data in a way that it always gives the best results.

Understanding Intelligence

With AI, smart systems can be built. We need to understand the concept of intelligence so that our brain can construct another intelligence system like itself.

What is Intelligence?

The ability of a system to calculate, reason, perceive relationships and analogies, learn from experience, store and retrieve information from memory, solve problems, comprehend complex ideas, use natural language fluently, classify, generalize, and adapt new situations.

Types of Intelligence

As described by Howard Gardner, an American developmental psychologist, Intelligence comes in multifold −

| Sr.No | Intelligence & Description | Example |

|---|---|---|

| 1 |

Linguistic intelligence The ability to speak, recognize, and use mechanisms of phonology (speech sounds), syntax (grammar), and semantics (meaning). |

Narrators, Orators |

| 2 |

Musical intelligence The ability to create, communicate with, and understand meanings made of sound, understanding of pitch, rhythm. |

Musicians, Singers, Composers |

| 3 |

Logical-mathematical intelligence The ability to use and understand relationships in the absence of action or objects. It is also the ability to understand complex and abstract ideas. |

Mathematicians, Scientists |

| 4 |

Spatial intelligence The ability to perceive visual or spatial information, change it, and re-create visual images without reference to the objects, construct 3D images, and to move and rotate them. |

Map readers, Astronauts, Physicists |

| 5 |

Bodily-Kinesthetic intelligence The ability to use complete or part of the body to solve problems or fashion products, control over fine and coarse motor skills, and manipulate the objects. |

Players, Dancers |

| 6 |

Intra-personal intelligence The ability to distinguish among one’s own feelings, intentions, and motivations. |

Gautam Buddhha |

| 7 |

Interpersonal intelligence The ability to recognize and make distinctions among other people’s feelings, beliefs, and intentions. |

Mass Communicators, Interviewers |

You can say a machine or a system is artificially intelligent when it is equipped with at least one or all intelligences in it.

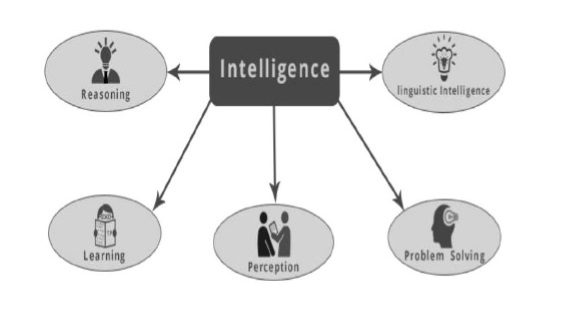

What is Intelligence Composed Of?

The intelligence is intangible. It is composed of −

- Reasoning

- Learning

- Problem Solving

- Perception

- Linguistic Intelligence

Let us go through all the components briefly −

Reasoning

It is the set of processes that enable us to provide basis for judgement, making decisions, and prediction. There are broadly two types −

| Inductive Reasoning | Deductive Reasoning |

|---|---|

| It conducts specific observations to makes broad general statements. | It starts with a general statement and examines the possibilities to reach a specific, logical conclusion. |

| Even if all of the premises are true in a statement, inductive reasoning allows for the conclusion to be false. | If something is true of a class of things in general, it is also true for all members of that class. |

| Example − «Nita is a teacher. Nita is studious. Therefore, All teachers are studious.» | Example − «All women of age above 60 years are grandmothers. Shalini is 65 years. Therefore, Shalini is a grandmother.» |

Learning − l

The ability of learning is possessed by humans, particular species of animals, and AI-enabled systems. Learning is categorized as follows −

Auditory Learning

It is learning by listening and hearing. For example, students listening to recorded audio lectures.

Episodic Learning

To learn by remembering sequences of events that one has witnessed or experienced. This is linear and orderly.

Motor Learning

It is learning by precise movement of muscles. For example, picking objects, writing, etc.

Observational Learning

To learn by watching and imitating others. For example, child tries to learn by mimicking her parent.

Perceptual Learning

It is learning to recognize stimuli that one has seen before. For example, identifying and classifying objects and situations.

Relational Learning

It involves learning to differentiate among various stimuli on the basis of relational properties, rather than absolute properties. For Example, Adding ‘little less’ salt at the time of cooking potatoes that came up salty last time, when cooked with adding say a tablespoon of salt.

-

Spatial Learning − It is learning through visual stimuli such as images, colors, maps, etc. For example, A person can create roadmap in mind before actually following the road.

-

Stimulus-Response Learning − It is learning to perform a particular behavior when a certain stimulus is present. For example, a dog raises its ear on hearing doorbell.

Problem Solving

It is the process in which one perceives and tries to arrive at a desired solution from a present situation by taking some path, which is blocked by known or unknown hurdles.

Problem solving also includes decision making, which is the process of selecting the best suitable alternative out of multiple alternatives to reach the desired goal.

Perception

It is the process of acquiring, interpreting, selecting, and organizing sensory information.

Perception presumes sensing. In humans, perception is aided by sensory organs. In the domain of AI, perception mechanism puts the data acquired by the sensors together in a meaningful manner.

Linguistic Intelligence

It is one’s ability to use, comprehend, speak, and write the verbal and written language. It is important in interpersonal communication.

What’s Involved in AI

Artificial intelligence is a vast area of study. This field of study helps in finding solutions to real world problems.

Let us now see the different fields of study within AI −

Machine Learning

It is one of the most popular fields of AI. The basic concept of this filed is to make the machine learning from data as the human beings can learn from his/her experience. It contains learning models on the basis of which the predictions can be made on unknown data.

Logic

It is another important field of study in which mathematical logic is used to execute the computer programs. It contains rules and facts to perform pattern matching, semantic analysis, etc.

Searching

This field of study is basically used in games like chess, tic-tac-toe. Search algorithms give the optimal solution after searching the whole search space.

Artificial neural networks

This is a network of efficient computing systems the central theme of which is borrowed from the analogy of biological neural networks. ANN can be used in robotics, speech recognition, speech processing, etc.

Genetic Algorithm

Genetic algorithms help in solving problems with the assistance of more than one program. The result would be based on selecting the fittest.

Knowledge Representation

It is the field of study with the help of which we can represent the facts in a way the machine that is understandable to the machine. The more efficiently knowledge is represented; the more system would be intelligent.

Application of AI

In this section, we will see the different fields supported by AI −

Gaming

AI plays crucial role in strategic games such as chess, poker, tic-tac-toe, etc., where machine can think of large number of possible positions based on heuristic knowledge.

Natural Language Processing

It is possible to interact with the computer that understands natural language spoken by humans.

Expert Systems

There are some applications which integrate machine, software, and special information to impart reasoning and advising. They provide explanation and advice to the users.

Vision Systems

These systems understand, interpret, and comprehend visual input on the computer. For example,

-

A spying aeroplane takes photographs, which are used to figure out spatial information or map of the areas.

-

Doctors use clinical expert system to diagnose the patient.

-

Police use computer software that can recognize the face of criminal with the stored portrait made by forensic artist.

Speech Recognition

Some intelligent systems are capable of hearing and comprehending the language in terms of sentences and their meanings while a human talks to it. It can handle different accents, slang words, noise in the background, change in human’s noise due to cold, etc.

Handwriting Recognition

The handwriting recognition software reads the text written on paper by a pen or on screen by a stylus. It can recognize the shapes of the letters and convert it into editable text.

Intelligent Robots

Robots are able to perform the tasks given by a human. They have sensors to detect physical data from the real world such as light, heat, temperature, movement, sound, bump, and pressure. They have efficient processors, multiple sensors and huge memory, to exhibit intelligence. In addition, they are capable of learning from their mistakes and they can adapt to the new environment.

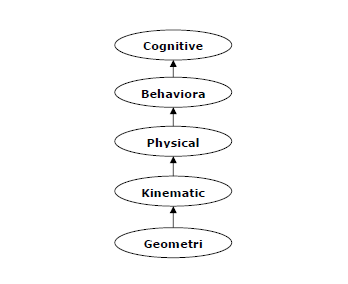

Cognitive Modeling: Simulating Human Thinking Procedure

Cognitive modeling is basically the field of study within computer science that deals with the study and simulating the thinking process of human beings. The main task of AI is to make machine think like human. The most important feature of human thinking process is problem solving. That is why more or less cognitive modeling tries to understand how humans can solve the problems. After that this model can be used for various AI applications such as machine learning, robotics, natural language processing, etc. Following is the diagram of different thinking levels of human brain −

Agent & Environment

In this section, we will focus on the agent and environment and how these help in Artificial Intelligence.

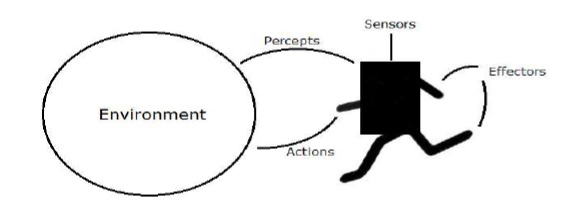

Agent

An agent is anything that can perceive its environment through sensors and acts upon that environment through effectors.

-

A human agent has sensory organs such as eyes, ears, nose, tongue and skin parallel to the sensors, and other organs such as hands, legs, mouth, for effectors.

-

A robotic agent replaces cameras and infrared range finders for the sensors, and various motors and actuators for effectors.

-

A software agent has encoded bit strings as its programs and actions.

Environment

Some programs operate in an entirely artificial environment confined to keyboard input, database, computer file systems and character output on a screen.

In contrast, some software agents (software robots or softbots) exist in rich, unlimited softbots domains. The simulator has a very detailed, complex environment. The software agent needs to choose from a long array of actions in real time. A softbot is designed to scan the online preferences of the customer and shows interesting items to the customer works in the real as well as an artificial environment.

AI with Python – Getting Started

In this chapter, we will learn how to get started with Python. We will also understand how Python helps for Artificial Intelligence.

Why Python for AI

Artificial intelligence is considered to be the trending technology of the future. Already there are a number of applications made on it. Due to this, many companies and researchers are taking interest in it. But the main question that arises here is that in which programming language can these AI applications be developed? There are various programming languages like Lisp, Prolog, C++, Java and Python, which can be used for developing applications of AI. Among them, Python programming language gains a huge popularity and the reasons are as follows −

Simple syntax & less coding

Python involves very less coding and simple syntax among other programming languages which can be used for developing AI applications. Due to this feature, the testing can be easier and we can focus more on programming.

Inbuilt libraries for AI projects

A major advantage for using Python for AI is that it comes with inbuilt libraries. Python has libraries for almost all kinds of AI projects. For example, NumPy, SciPy, matplotlib, nltk, SimpleAI are some the important inbuilt libraries of Python.

-

Open source − Python is an open source programming language. This makes it widely popular in the community.

-

Can be used for broad range of programming − Python can be used for a broad range of programming tasks like small shell script to enterprise web applications. This is another reason Python is suitable for AI projects.

Features of Python

Python is a high-level, interpreted, interactive and object-oriented scripting language. Python is designed to be highly readable. It uses English keywords frequently where as other languages use punctuation, and it has fewer syntactical constructions than other languages. Python’s features include the following −

-

Easy-to-learn − Python has few keywords, simple structure, and a clearly defined syntax. This allows the student to pick up the language quickly.

-

Easy-to-read − Python code is more clearly defined and visible to the eyes.

-

Easy-to-maintain − Python’s source code is fairly easy-to-maintain.

-

A broad standard library − Python’s bulk of the library is very portable and cross-platform compatible on UNIX, Windows, and Macintosh.

-

Interactive Mode − Python has support for an interactive mode which allows interactive testing and debugging of snippets of code.

-

Portable − Python can run on a wide variety of hardware platforms and has the same interface on all platforms.

-

Extendable − We can add low-level modules to the Python interpreter. These modules enable programmers to add to or customize their tools to be more efficient.

-

Databases − Python provides interfaces to all major commercial databases.

-

GUI Programming − Python supports GUI applications that can be created and ported to many system calls, libraries and windows systems, such as Windows MFC, Macintosh, and the X Window system of Unix.

-

Scalable − Python provides a better structure and support for large programs than shell scripting.

Important features of Python

Let us now consider the following important features of Python −

-

It supports functional and structured programming methods as well as OOP.

-

It can be used as a scripting language or can be compiled to byte-code for building large applications.

-

It provides very high-level dynamic data types and supports dynamic type checking.

-

It supports automatic garbage collection.

-

It can be easily integrated with C, C++, COM, ActiveX, CORBA, and Java.

Installing Python

Python distribution is available for a large number of platforms. You need to download only the binary code applicable for your platform and install Python.

If the binary code for your platform is not available, you need a C compiler to compile the source code manually. Compiling the source code offers more flexibility in terms of choice of features that you require in your installation.

Here is a quick overview of installing Python on various platforms −

Unix and Linux Installation

Follow these steps to install Python on Unix/Linux machine.

-

Open a Web browser and go to https://www.python.org/downloads

-

Follow the link to download zipped source code available for Unix/Linux.

-

Download and extract files.

-

Editing the Modules/Setup file if you want to customize some options.

-

run ./configure script

-

make

-

make install

This installs Python at the standard location /usr/local/bin and its libraries at /usr/local/lib/pythonXX where XX is the version of Python.

Windows Installation

Follow these steps to install Python on Windows machine.

-

Open a Web browser and go to https://www.python.org/downloads

-

Follow the link for the Windows installer python-XYZ.msi file where XYZ is the version you need to install.

-

To use this installer python-XYZ.msi, the Windows system must support Microsoft Installer 2.0. Save the installer file to your local machine and then run it to find out if your machine supports MSI.

-

Run the downloaded file. This brings up the Python install wizard, which is really easy to use. Just accept the default settings and wait until the install is finished.

Macintosh Installation

If you are on Mac OS X, it is recommended that you use Homebrew to install Python 3. It is a great package installer for Mac OS X and it is really easy to use. If you don’t have Homebrew, you can install it using the following command −

$ ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

We can update the package manager with the command below −

$ brew update

Now run the following command to install Python3 on your system −

$ brew install python3

Setting up PATH

Programs and other executable files can be in many directories, so operating systems provide a search path that lists the directories that the OS searches for executables.

The path is stored in an environment variable, which is a named string maintained by the operating system. This variable contains information available to the command shell and other programs.

The path variable is named as PATH in Unix or Path in Windows (Unix is case-sensitive; Windows is not).

In Mac OS, the installer handles the path details. To invoke the Python interpreter from any particular directory, you must add the Python directory to your path.

Setting Path at Unix/Linux

To add the Python directory to the path for a particular session in Unix −

-

In the csh shell

Type setenv PATH «$PATH:/usr/local/bin/python» and press Enter.

-

In the bash shell (Linux)

Type export ATH = «$PATH:/usr/local/bin/python» and press Enter.

-

In the sh or ksh shell

Type PATH = «$PATH:/usr/local/bin/python» and press Enter.

Note − /usr/local/bin/python is the path of the Python directory.

Setting Path at Windows

To add the Python directory to the path for a particular session in Windows −

-

At the command prompt − type path %path%;C:Python and press Enter.

Note − C:Python is the path of the Python directory.

Running Python

Let us now see the different ways to run Python. The ways are described below −

Interactive Interpreter

We can start Python from Unix, DOS, or any other system that provides you a command-line interpreter or shell window.

-

Enter python at the command line.

-

Start coding right away in the interactive interpreter.

$python # Unix/Linux

or

python% # Unix/Linux

or

C:> python # Windows/DOS

Here is the list of all the available command line options −

| S.No. | Option & Description |

|---|---|

| 1 |

-d It provides debug output. |

| 2 |

-o It generates optimized bytecode (resulting in .pyo files). |

| 3 |

-S Do not run import site to look for Python paths on startup. |

| 4 |

-v Verbose output (detailed trace on import statements). |

| 5 |

-x Disables class-based built-in exceptions (just use strings); obsolete starting with version 1.6. |

| 6 |

-c cmd Runs Python script sent in as cmd string. |

| 7 |

File Run Python script from given file. |

Script from the Command-line

A Python script can be executed at the command line by invoking the interpreter on your application, as in the following −

$python script.py # Unix/Linux

or,

python% script.py # Unix/Linux

or,

C:> python script.py # Windows/DOS

Note − Be sure the file permission mode allows execution.

Integrated Development Environment

You can run Python from a Graphical User Interface (GUI) environment as well, if you have a GUI application on your system that supports Python.

-

Unix − IDLE is the very first Unix IDE for Python.

-

Windows − PythonWin is the first Windows interface for Python and is an IDE with a GUI.

-

Macintosh − The Macintosh version of Python along with the IDLE IDE is available from the main website, downloadable as either MacBinary or BinHex’d files.

If you are not able to set up the environment properly, then you can take help from your system admin. Make sure the Python environment is properly set up and working perfectly fine.

We can also use another Python platform called Anaconda. It includes hundreds of popular data science packages and the conda package and virtual environment manager for Windows, Linux and MacOS. You can download it as per your operating system from the link https://www.anaconda.com/download/.

For this tutorial we are using Python 3.6.3 version on MS Windows.

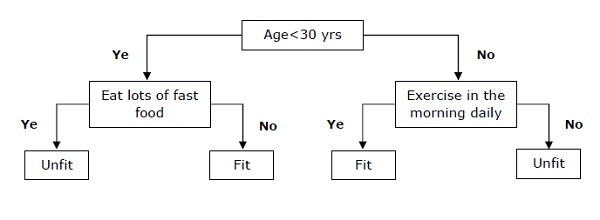

AI with Python – Machine Learning

Learning means the acquisition of knowledge or skills through study or experience. Based on this, we can define machine learning (ML) as follows −

It may be defined as the field of computer science, more specifically an application of artificial intelligence, which provides computer systems the ability to learn with data and improve from experience without being explicitly programmed.

Basically, the main focus of machine learning is to allow the computers learn automatically without human intervention. Now the question arises that how such learning can be started and done? It can be started with the observations of data. The data can be some examples, instruction or some direct experiences too. Then on the basis of this input, machine makes better decision by looking for some patterns in data.

Types of Machine Learning (ML)

Machine Learning Algorithms helps computer system learn without being explicitly programmed. These algorithms are categorized into supervised or unsupervised. Let us now see a few algorithms −

Supervised machine learning algorithms

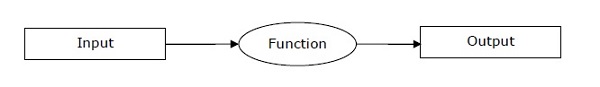

This is the most commonly used machine learning algorithm. It is called supervised because the process of algorithm learning from the training dataset can be thought of as a teacher supervising the learning process. In this kind of ML algorithm, the possible outcomes are already known and training data is also labeled with correct answers. It can be understood as follows −

Suppose we have input variables x and an output variable y and we applied an algorithm to learn the mapping function from the input to output such as −

Y = f(x)

Now, the main goal is to approximate the mapping function so well that when we have new input data (x), we can predict the output variable (Y) for that data.

Mainly supervised leaning problems can be divided into the following two kinds of problems −

-

Classification − A problem is called classification problem when we have the categorized output such as “black”, “teaching”, “non-teaching”, etc.

-

Regression − A problem is called regression problem when we have the real value output such as “distance”, “kilogram”, etc.

Decision tree, random forest, knn, logistic regression are the examples of supervised machine learning algorithms.

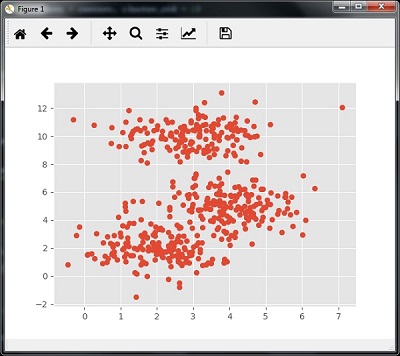

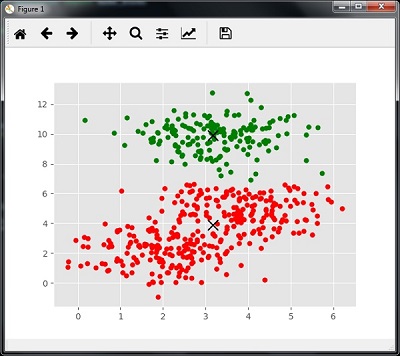

Unsupervised machine learning algorithms

As the name suggests, these kinds of machine learning algorithms do not have any supervisor to provide any sort of guidance. That is why unsupervised machine learning algorithms are closely aligned with what some call true artificial intelligence. It can be understood as follows −

Suppose we have input variable x, then there will be no corresponding output variables as there is in supervised learning algorithms.

In simple words, we can say that in unsupervised learning there will be no correct answer and no teacher for the guidance. Algorithms help to discover interesting patterns in data.

Unsupervised learning problems can be divided into the following two kinds of problem −

-

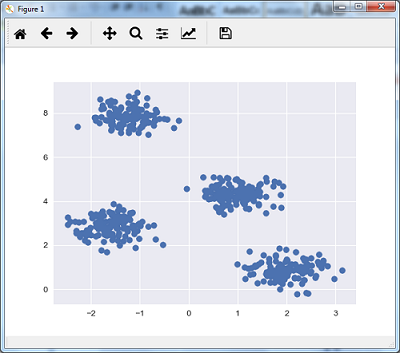

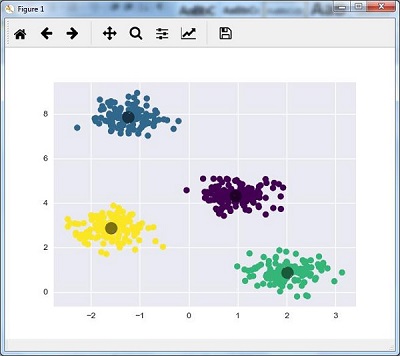

Clustering − In clustering problems, we need to discover the inherent groupings in the data. For example, grouping customers by their purchasing behavior.

-

Association − A problem is called association problem because such kinds of problem require discovering the rules that describe large portions of our data. For example, finding the customers who buy both x and y.

K-means for clustering, Apriori algorithm for association are the examples of unsupervised machine learning algorithms.

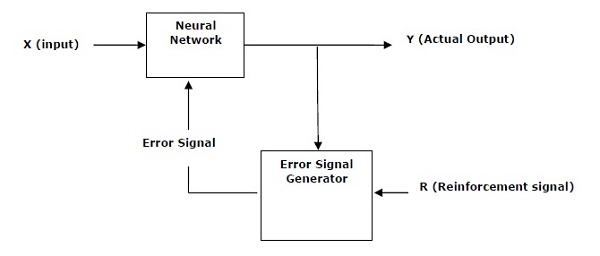

Reinforcement machine learning algorithms

These kinds of machine learning algorithms are used very less. These algorithms train the systems to make specific decisions. Basically, the machine is exposed to an environment where it trains itself continually using the trial and error method. These algorithms learn from past experience and tries to capture the best possible knowledge to make accurate decisions. Markov Decision Process is an example of reinforcement machine learning algorithms.

Most Common Machine Learning Algorithms